Machine Vision on the Arduino Portenta and Vision Shield LoRa - Part I

- Carlos Gustavo Merolla

- Mar 21, 2021

- 5 min read

Introduction

This post focuses on the combination of the Arduino PRO hardware, the OpenMV IDE and Edge Impulse. If you read my review of the Arduino Portenta or the Introduction to AI on MCUs, you may think you already have a clear idea of what you can do, and you will probably be wrong! Through the OpenMV IDE, the Vision Shield gives you a lot of functionality for dealing with many kinds of problems. In my case, Big Cat Brother, and especially its Smart Cameras, relies on classic computer vision techniques implemented in code, because sometimes you cannot afford the risk of false identifications. OpenMV offers many of those functions, which we will explore in the future, such as feature detection, change detection against a background, circle detection and many more.

The goal

To illustrate the impressive power of these tools, I selected an "intruder detection" case using Edge Impulse. The basic idea of this example is to detect whether a room is empty or not; beyond that, we will give the AI model enough information to tell what is inside the room, the possibilities being Jaguars or Lions. Don't forget this site is all about Cats!

Edge Impulse lets you define and train models in a matter of minutes; once the model is trained, you simply deploy it to the Portenta Vision Shield by copying a few downloaded files. As simple as that.

To do this, you will have to install the OpenMV firmware on the Arduino Portenta and the OpenMV IDE on your computer, and sign up on the Edge Impulse site. With all that in place, we will follow this easy 11-step procedure:

1. Gather sample data
2. Create a new project on edgeImpulse
3. Upload the data
4. Define and configure the model
5. Train the model
6. Validate the model
7. Reviews, corrections, re-training
8. Version control
9. Download the deployment
10. Copy the files to the Arduino Portenta
11. Run it

Gather sample data

In the ML world data is everything, and the tinyML world is no exception; I would say that good-quality data is even more important on the tiny side of things. We are going to take pictures of an empty place from different angles, then place a Jaguar in the room and take more pictures, and then do the same with a lion. To do so, you can run the following script in the OpenMV IDE to capture each set of pictures. You can choose the naming convention and the number of samples, and, as you already know, the more samples you have the better.

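```python
# Sample Gathering
#
# Captures a sequence of grayscale QVGA pictures and saves them to the
# board's filesystem. Run it once per set (empty room, jaguar, lion),
# changing file_prefix each time.

import sensor, image, pyb

RED_LED_PIN = 1
BLUE_LED_PIN = 3

sensor.reset()
sensor.set_pixformat(sensor.GRAYSCALE)
sensor.set_framesize(sensor.QVGA)    # 320x240
sensor.skip_frames(time = 2000)      # Let new settings take effect.

# Red LED stays on while the sensor settles for two more seconds.
pyb.LED(RED_LED_PIN).on()
sensor.skip_frames(time = 2000)
pyb.LED(RED_LED_PIN).off()

# Blue LED stays on while the pictures are being captured.
pyb.LED(BLUE_LED_PIN).on()

# Take a sequence of pictures; replace the prefix for each set.
file_prefix = 'BKG_SAMPLE_'

# Change the number of samples here.
for i in range(300):
    file_name = file_prefix + str(i) + '.jpg'
    print(file_name)
    sensor.snapshot().save(file_name)

pyb.LED(BLUE_LED_PIN).off()
pyb.delay(1000)
```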
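Before uploading, it is worth checking that every set has roughly the same number of pictures. The sketch below is plain desktop Python, not OpenMV code, and assumes you have copied the images from the board's USB drive to a local folder, with each set saved under its own prefix. `count_by_prefix`, `count_folder` and `imbalance_ratio` are hypothetical helpers, and prefixes other than `BKG_SAMPLE_` are only examples.

```python
import re
from collections import Counter
from pathlib import Path

# Matches names such as "BKG_SAMPLE_12.jpg": a prefix ending in "_" plus a sample index.
SAMPLE_NAME = re.compile(r"^(?P<prefix>.+_)(?P<index>\d+)\.jpg$", re.IGNORECASE)


def count_by_prefix(file_names):
    """Count captured samples per file prefix; names that do not match are ignored."""
    counts = Counter()
    for name in file_names:
        match = SAMPLE_NAME.match(name)
        if match:
            counts[match.group("prefix")] += 1
    return counts


def count_folder(folder):
    """Hypothetical helper: count the .jpg files copied from the board into `folder`."""
    return count_by_prefix(p.name for p in Path(folder).glob("*.jpg"))


def imbalance_ratio(counts):
    """Largest set size divided by the smallest one (1.0 means perfectly balanced)."""
    if not counts:
        raise ValueError("no samples found")
    return max(counts.values()) / min(counts.values())


if __name__ == "__main__":
    # In-memory example; on real data use count_folder("path/to/images").
    names = ["BKG_SAMPLE_0.jpg", "BKG_SAMPLE_1.jpg", "JAGUAR_SAMPLE_0.jpg", "notes.txt"]
    counts = count_by_prefix(names)
    print(dict(counts))             # {'BKG_SAMPLE_': 2, 'JAGUAR_SAMPLE_': 1}
    print(imbalance_ratio(counts))  # 2.0
```

A ratio close to 1.0 means no label dominates the training data.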

Create a new project on edgeImpulse

Once you have your Edge Impulse account, click your user icon and select a project or create a new one. If you are going to reuse a project, remember to delete all the previous data it may contain. If you want to start a project from scratch, just choose a name for it and that is it. We are not going to connect any device to Edge Impulse, because those end-to-end processes are not yet implemented for the OpenMV environment.

Once your project is created, you will follow the workflow on the left side of the screen, almost in order.

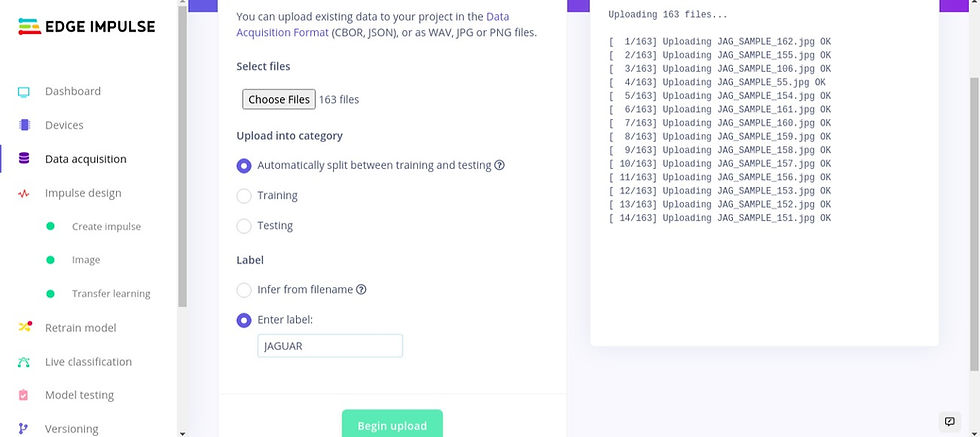

Upload data

Edge Impulse offers many ways of getting the data to train the models: you can connect your device to EI, you can use the data forwarder to send data over a serial connection, etc. Edge Impulse support for the Arduino Portenta is in preview mode. If you use the Arduino firmware you can gather samples directly from the board, but that is not the case with the OpenMV IDE, so you will provide the datasets by uploading the files you generated with the script from the first step. The procedure is simple: click the upload button in Edge Impulse, select your files and you are done.

No matter how you named the pictures, you will have to specify a label for them at the moment you upload them. This is where the good and bad decisions start. Besides the labels, you will have to define two different sets for your sample data: one set is used to train the model and the other to validate or test it. When you upload your samples, Edge Impulse can distribute them automatically between the two sets. Ideally, you should choose different pictures for each set yourself. Keep in mind that one set is used to train the model and the other to challenge it. In a real scenario your trained model will face unexpected data and it may fail, so it is a good idea to put some challenging pictures in the validation set.
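If you prefer to pick the sets yourself rather than letting Edge Impulse do it, a minimal sketch of a reproducible split for one label could look like this. It is plain Python run on your computer; the 20% test fraction, the fixed seed and the `split_samples` name are assumptions for illustration, not Edge Impulse settings.

```python
import random


def split_samples(file_names, test_fraction=0.2, challenging=(), seed=42):
    """Split one label's files into disjoint (train, test) lists.

    Assumption: test_fraction and seed are illustrative defaults, not values
    used by Edge Impulse. Files listed in `challenging` always go to the test set.
    """
    if not 0.0 <= test_fraction < 1.0:
        raise ValueError("test_fraction must be in [0, 1)")
    hard = set(challenging)
    # Hand-picked challenging pictures are forced into the test set.
    forced_test = sorted(f for f in file_names if f in hard)
    rest = sorted(f for f in file_names if f not in hard)
    # A seeded shuffle makes the split identical on every run.
    random.Random(seed).shuffle(rest)
    n_test = max(0, round(len(file_names) * test_fraction) - len(forced_test))
    test = forced_test + rest[:n_test]
    train = rest[n_test:]
    return train, test


if __name__ == "__main__":
    lions = [f"LION_SAMPLE_{i}.jpg" for i in range(10)]
    train, test = split_samples(lions, challenging=["LION_SAMPLE_7.jpg"])
    print(len(train), len(test))  # 8 2
```

Calling it once per label (EMPTY, JAGUAR, LION) gives you the training and test lists to upload for each one. Because the shuffle is seeded, rerunning the script produces the same split, which keeps the test set stable between training runs.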

You will repeat the upload process six times: there are three labels (EMPTY, LION and JAGUAR), and each of them has to be uploaded to both the training set and the validation set. If you are lazy, you can let Edge Impulse do the distribution for you.

Define and configure the model

In Edge Impulse terminology you need to define an impulse, which means defining an input block and a learning block. The input block connects the model to the data you provided, and the learning block defines the layers of the model.

As we are dealing with images, you will select the image input and configure its color space (grayscale in this case) and its dimensions. Since this is the input to the model, the dimensions must be compatible with the ones the model expects; in this case we are going to use a predefined "Transfer Learning" block that uses a 96x96 pixel resolution. The images captured by the Vision Shield have QVGA (320x240) resolution and will be resized automatically. I use QVGA because the same images can also be used to train other models with other tools. When you are finished, you will see something like the following image.
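The post does not say exactly how the 320x240 frames are turned into 96x96 inputs, and Edge Impulse has its own resize options in the studio. As an illustration only, this sketch assumes one common approach: crop the largest centered square (240x240, starting 40 pixels from the left) and downsample it with nearest-neighbour sampling, a scale factor of 96/240 = 0.4. A grayscale 96x96 input then holds 96 * 96 = 9216 values. The function names are hypothetical.

```python
def center_crop_box(width, height):
    """Return (left, top, size) of the largest centered square in a width x height frame."""
    size = min(width, height)
    return (width - size) // 2, (height - size) // 2, size


def resize_nearest(pixels, out_size):
    """Nearest-neighbour resize of a square grayscale image given as a list of rows."""
    in_size = len(pixels)
    return [
        [pixels[(y * in_size) // out_size][(x * in_size) // out_size] for x in range(out_size)]
        for y in range(out_size)
    ]


def qvga_to_model_input(frame, out_size=96):
    """Assumed pipeline: center-crop a grayscale frame to a square, then resize it."""
    height, width = len(frame), len(frame[0])
    left, top, size = center_crop_box(width, height)
    square = [row[left:left + size] for row in frame[top:top + size]]
    return resize_nearest(square, out_size)


if __name__ == "__main__":
    print(center_crop_box(320, 240))  # (40, 0, 240)
    frame = [[x % 256 for x in range(320)] for _ in range(240)]  # synthetic 8-bit frame
    model_input = qvga_to_model_input(frame)
    print(len(model_input), len(model_input[0]))  # 96 96
```

Squashing the whole frame to 96x96 instead would keep the edges of the scene but distort the proportions of the animals; which trade-off works better depends on your camera placement.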

The learning block is where all the work happens. If you use the "easy" mode, which means using the graphical interface and models Edge Impulse has already built, you will be using optimized versions of popular models. In the case of Transfer Learning, it uses a MobileNet model. I used this model for this example because it works pretty well with images. The other option is to define the network yourself (or edit the standard ones): with this option you can write a Python script that includes the layers you want. This "expert" mode requires some experience defining models with TensorFlow and its Keras API.

In easy mode you just select the input block (images) and the learning block (Transfer Learning) and that is it. Make sure you select the GRAYSCALE color space for the input block. As you gain confidence, you might want to change some of the model parameters, including the confidence threshold, which is used to decide whether an inference result can be trusted or should be treated as uncertain (the default value is 60%).
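The confidence threshold works roughly like this: the model returns one score per label, and if the best score falls below the threshold the result is reported as uncertain instead of being trusted. This is a minimal sketch of that rule, not Edge Impulse's code; the `classify` name, the dictionary input and the use of `>=` at the boundary are assumptions.

```python
def classify(scores, threshold=0.6):
    """Return the best label, or "uncertain" if its score is below `threshold`.

    `scores` maps each label (e.g. EMPTY, JAGUAR, LION) to a probability in [0, 1].
    Assumption: a score exactly equal to the threshold is accepted.
    """
    if not scores:
        raise ValueError("scores must not be empty")
    label, score = max(scores.items(), key=lambda item: item[1])
    return label if score >= threshold else "uncertain"


if __name__ == "__main__":
    print(classify({"EMPTY": 0.05, "JAGUAR": 0.85, "LION": 0.10}))  # JAGUAR
    print(classify({"EMPTY": 0.30, "JAGUAR": 0.40, "LION": 0.30}))  # uncertain
```

Raising the threshold makes the model say "uncertain" more often but reduces false identifications, which matches the concern about false positives mentioned in the introduction.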

The next steps are where the action really begins. As blog posts have a limited number of characters, I will cover the details in the next post; see Part II!
